0G Storage: Built for Massive Data

Current storage options force impossible tradeoffs:

- Cloud providers: Fast, but expensive and prone to vendor lock-in

- Decentralized options: Either slow (IPFS), limited (Filecoin), or prohibitively expensive (Arweave)

What is 0G Storage?

0G Storage breaks these tradeoffs: it is a decentralized storage network that is as fast as AWS S3 but built for Web3, and purpose-designed for AI workloads and massive datasets.

New to decentralized storage?

Traditional storage (like AWS):

- One company controls your data

- They can delete it, censor it, or change prices

- Single point of failure

Decentralized storage (like 0G):

- Data spread across thousands of nodes

- No single entity can delete or censor

- Remains available even when individual nodes go offline

Why Choose 0G Storage?

🚀 The Complete Package

| What You Get | Why It Matters |
|---|---|
| 95% lower costs than AWS | Sustainable for large datasets |
| Instant retrieval | No waiting for critical data |
| Structured + unstructured data | One solution for all storage needs |
| Universal compatibility | Works with any blockchain or Web2 app |
| Proven scale | Already handling TB-scale workloads |

How It Works

0G Storage is a distributed data storage system designed with on-chain elements to incentivize storage nodes to store data on behalf of users. Anyone can run a storage node and receive rewards for maintaining one.

Technical Architecture

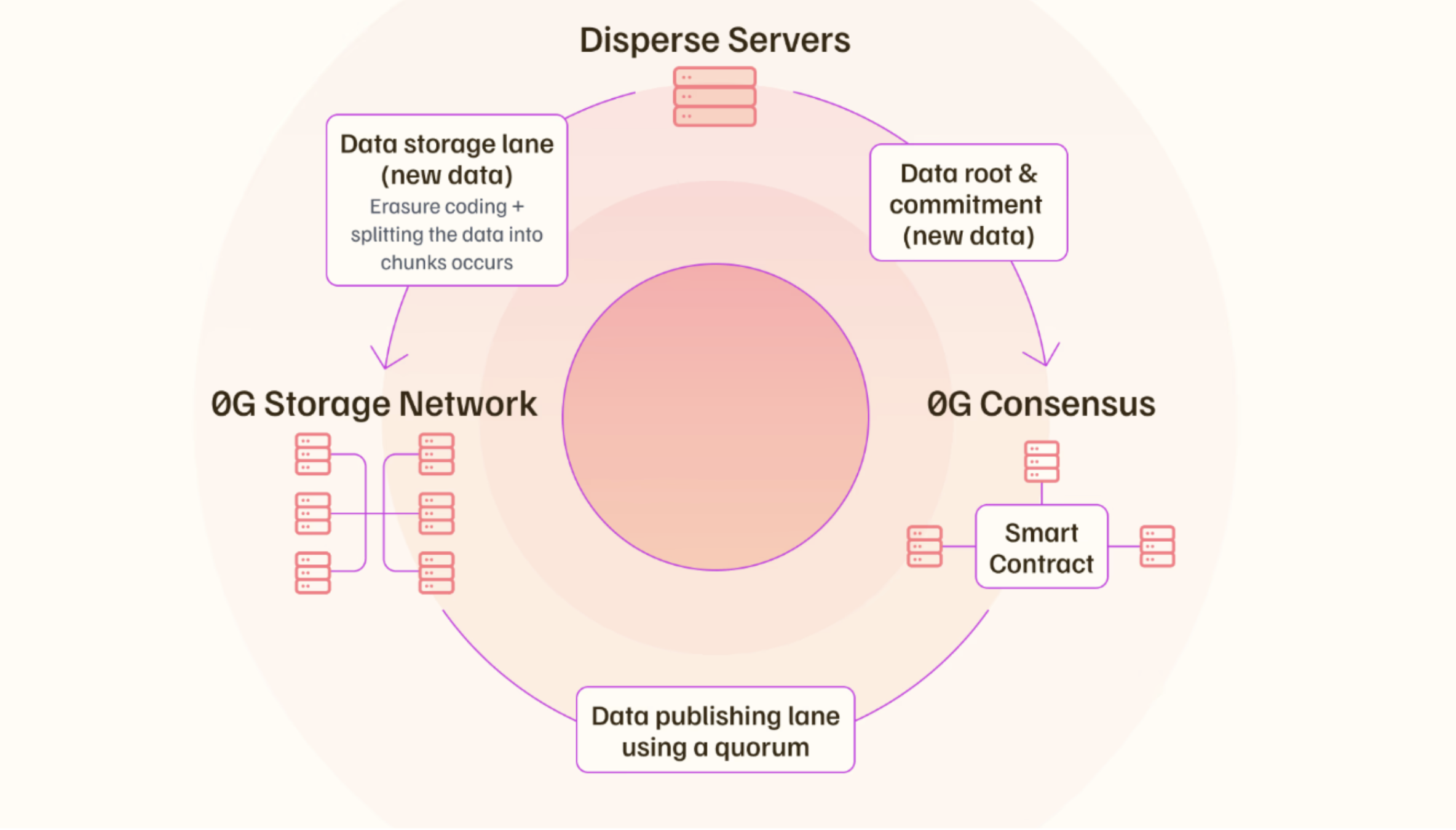

0G Storage uses a two-lane system:

📤 Data Publishing Lane

- Handles metadata and availability proofs

- Verified through the 0G Consensus network

- Enables fast data discovery
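The publishing lane only needs a compact commitment to the data, not the data itself. As an illustrative sketch, assuming SHA-256 and a simple binary Merkle tree (the hash function, chunk size, and record fields here are hypothetical, not 0G's actual format), such a commitment could be computed like this:

```python
import hashlib

CHUNK_SIZE = 256  # hypothetical chunk size in bytes, chosen only for illustration


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Split data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)] or [b""]


def merkle_root(chunks: list[bytes]) -> bytes:
    """Compute a binary Merkle root over SHA-256 leaf hashes."""
    level = [hashlib.sha256(c).digest() for c in chunks]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        level = [hashlib.sha256(level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0]


def publish_metadata(data: bytes) -> dict:
    """Build the small record that would travel on the publishing lane (hypothetical fields)."""
    chunks = split_chunks(data)
    return {"size": len(data), "chunks": len(chunks), "root": merkle_root(chunks).hex()}
```

For a 1,000-byte file this yields a record with 4 chunks and a 64-character hex root. The record stays the same size no matter how large the file is, which is what lets discovery stay fast while the bulk data moves on the storage lane.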

💾 Data Storage Lane

- Manages actual data storage

- Uses erasure coding: splits data into chunks with redundancy

- Even if 30% of nodes fail, your data remains accessible

- Automatic replication maintains availability
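To make the erasure coding idea concrete, here is a minimal sketch of a systematic Reed-Solomon-style code over the prime field GF(257). It uses k=7 data shards and n=10 total shards, so any 3 of 10 shards (30%) can be lost. The field, the parameters, and the function names are illustrative assumptions; this is not a description of 0G's actual coding scheme.

```python
P = 257  # prime modulus: every byte value 0..255 is an element of GF(257)


def _lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points (mod P)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        # Division in GF(P) is multiplication by the modular inverse (Fermat's little theorem).
        total = (total + yj * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> list[list[int]]:
    """Produce n shards (k data + n - k parity); any k of them rebuild the data."""
    if not 0 < k <= n < P:
        raise ValueError("need 0 < k <= n < P")
    padded = data + b"\x00" * (-len(data) % k)
    shards: list[list[int]] = [[] for _ in range(n)]
    for s in range(0, len(padded), k):
        # Treat k bytes as the polynomial's values at x = 1..k (systematic layout).
        stripe = list(zip(range(1, k + 1), padded[s:s + k]))
        for i in range(n):
            shards[i].append(_lagrange_eval(stripe, i + 1))
    return shards


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving shards (shard index -> values)."""
    if len(available) < k:
        raise ValueError("not enough shards to reconstruct the data")
    chosen = sorted(available.items())[:k]
    out = bytearray()
    for pos in range(len(chosen[0][1])):
        points = [(i + 1, shard[pos]) for i, shard in chosen]
        out.extend(_lagrange_eval(points, x) for x in range(1, k + 1))
    return bytes(out[:length])
```

With k=7 and n=10, losing the shards at indices 0, 4, and 9 still leaves seven shards, enough to rebuild the data exactly. Parity values can equal 256, which is why shards are lists of integers rather than bytes; production codes usually work in GF(2^8) so every symbol fits in one byte.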

Storage Layers for Different Needs

📁 Log Layer (Immutable Storage)

Perfect for: AI training data, archives, backups

- Append-only (write once, read many)

- Optimized for large files

- Lower cost for permanent storage

Use cases:

- ML datasets

- Video/image archives

- Blockchain history

- General large-file storage
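The append-only model can be illustrated with a toy in-memory class: it exposes `append` and `read` but deliberately has no update or delete operation. The class and method names are hypothetical and not part of any 0G SDK.

```python
class AppendOnlyLog:
    """Toy write-once, read-many store: entries can be appended and read, never changed."""

    def __init__(self) -> None:
        self._entries: list[bytes] = []

    def append(self, data: bytes) -> int:
        """Store a copy of data and return its position, which never changes."""
        self._entries.append(bytes(data))
        return len(self._entries) - 1

    def read(self, position: int) -> bytes:
        """Return the entry at position; negative or out-of-range positions are rejected."""
        if not 0 <= position < len(self._entries):
            raise IndexError(f"no entry at position {position}")
        return self._entries[position]

    def __len__(self) -> int:
        return len(self._entries)
```

Because nothing is ever overwritten, a reader who fetched an entry once can trust that the same position returns the same bytes later, which is the property that suits datasets, archives, and history.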

🔑 Key-Value Layer (Mutable Storage)

Perfect for: Databases, dynamic content, state storage

- Update existing data

- Fast key-based retrieval

- Real-time applications

Use cases:

- On-chain databases

- User profiles

- Game state

- Collaborative documents
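One common way to offer mutable storage on top of immutable storage is a log-structured key-value store: updates are appended, and an index points each key at its newest value. The sketch below illustrates that general technique; the source does not say this is exactly how 0G's Key-Value layer is implemented, and the class and method names are hypothetical.

```python
from typing import Optional


class LogBackedKV:
    """Toy mutable key-value store built on an append-only list of writes.

    Every put appends a (key, value) record; the newest record for a key wins.
    An index maps each key to the position of its newest record for fast lookup.
    """

    def __init__(self) -> None:
        self._log: list[tuple[str, Optional[bytes]]] = []
        self._index: dict[str, int] = {}

    def put(self, key: str, value: bytes) -> None:
        self._log.append((key, value))
        self._index[key] = len(self._log) - 1

    def delete(self, key: str) -> None:
        self._log.append((key, None))  # tombstone record marks the key as deleted
        self._index[key] = len(self._log) - 1

    def get(self, key: str) -> Optional[bytes]:
        """Fast key-based lookup through the index; None if missing or deleted."""
        pos = self._index.get(key)
        return None if pos is None else self._log[pos][1]

    def history(self, key: str) -> list[Optional[bytes]]:
        """All values ever written for key, oldest first (None marks a delete)."""
        return [v for k, v in self._log if k == key]
```

The design keeps reads fast (one index lookup) while every change remains an append, so earlier versions of a game state or profile are still recoverable from the log.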

How Storage Providers Earn

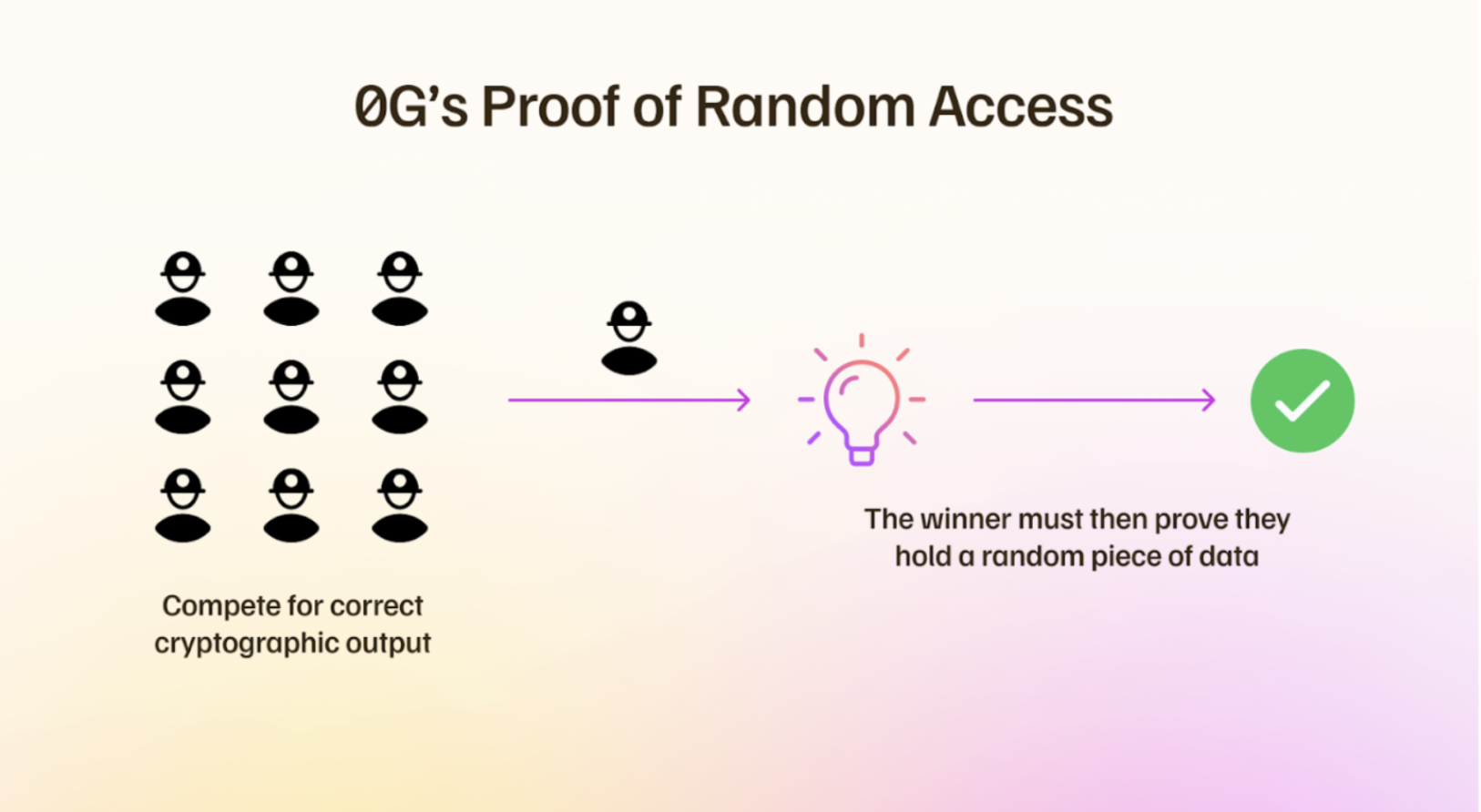

0G Storage is maintained by a network of miners incentivized to store and manage data through a unique consensus mechanism known as Proof of Random Access (PoRA).

How PoRA Works

- Random Challenges: System randomly asks miners to prove they have specific data

- Cryptographic Proof: Miners must generate a valid hash (like Bitcoin mining)

- Quick Response: Must respond fast to prove data is readily accessible

- Fair Rewards: Successful proofs earn storage fees

What's PoRA in simple terms?

Imagine a teacher randomly checking if students did their homework:

- Teacher picks a random student (miner)

- Asks for a specific page (data chunk)

- Student must show it quickly

- If correct, student gets rewarded

This ensures miners actually store the data they claim to store.
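The challenge-response loop can be sketched in a heavily simplified form. Assumptions: the verifier holds a SHA-256 commitment for each chunk (a real system would more likely check a Merkle proof against an on-chain root), the challenged chunk is derived from a random seed, the "valid hash" is a proof-of-work style check against a target, and a deadline models the quick-response requirement. All names, the difficulty, and the deadline are hypothetical; this is not 0G's actual PoRA algorithm.

```python
import hashlib
import os
from typing import Optional

Response = Optional[tuple[bytes, int]]  # (chunk, nonce), or None if no proof was found


def h(*parts: bytes) -> bytes:
    """SHA-256 over the concatenation of parts."""
    return hashlib.sha256(b"".join(parts)).digest()


def challenge(num_chunks: int) -> tuple[bytes, int]:
    """Verifier side: draw a random seed and derive which chunk must be proven."""
    seed = os.urandom(32)
    index = int.from_bytes(h(seed), "big") % num_chunks
    return seed, index


class Miner:
    """Miner side: can only answer correctly if it really holds the chunks."""

    def __init__(self, chunks: list[bytes]) -> None:
        self.chunks = chunks

    def respond(self, seed: bytes, index: int, target: int,
                max_tries: int = 1_000_000) -> Response:
        chunk = self.chunks[index]
        # Proof-of-work style search: find a nonce so hash(seed, nonce, chunk) < target.
        for nonce in range(max_tries):
            digest = h(seed, nonce.to_bytes(8, "big"), chunk)
            if int.from_bytes(digest, "big") < target:
                return chunk, nonce
        return None


def verify(commitments: list[bytes], seed: bytes, index: int, target: int,
           response: Response, elapsed: float, deadline: float) -> bool:
    """Accept only a timely answer that contains the committed chunk and a valid hash."""
    if response is None or elapsed > deadline:
        return False
    chunk, nonce = response
    if h(chunk) != commitments[index]:  # the returned data must match what was stored
        return False
    digest = h(seed, nonce.to_bytes(8, "big"), chunk)
    return int.from_bytes(digest, "big") < target
```

Because the seed is unpredictable, a miner cannot precompute answers, and because the hash covers the chunk itself, a miner that discarded the data cannot produce an answer that passes the commitment check.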

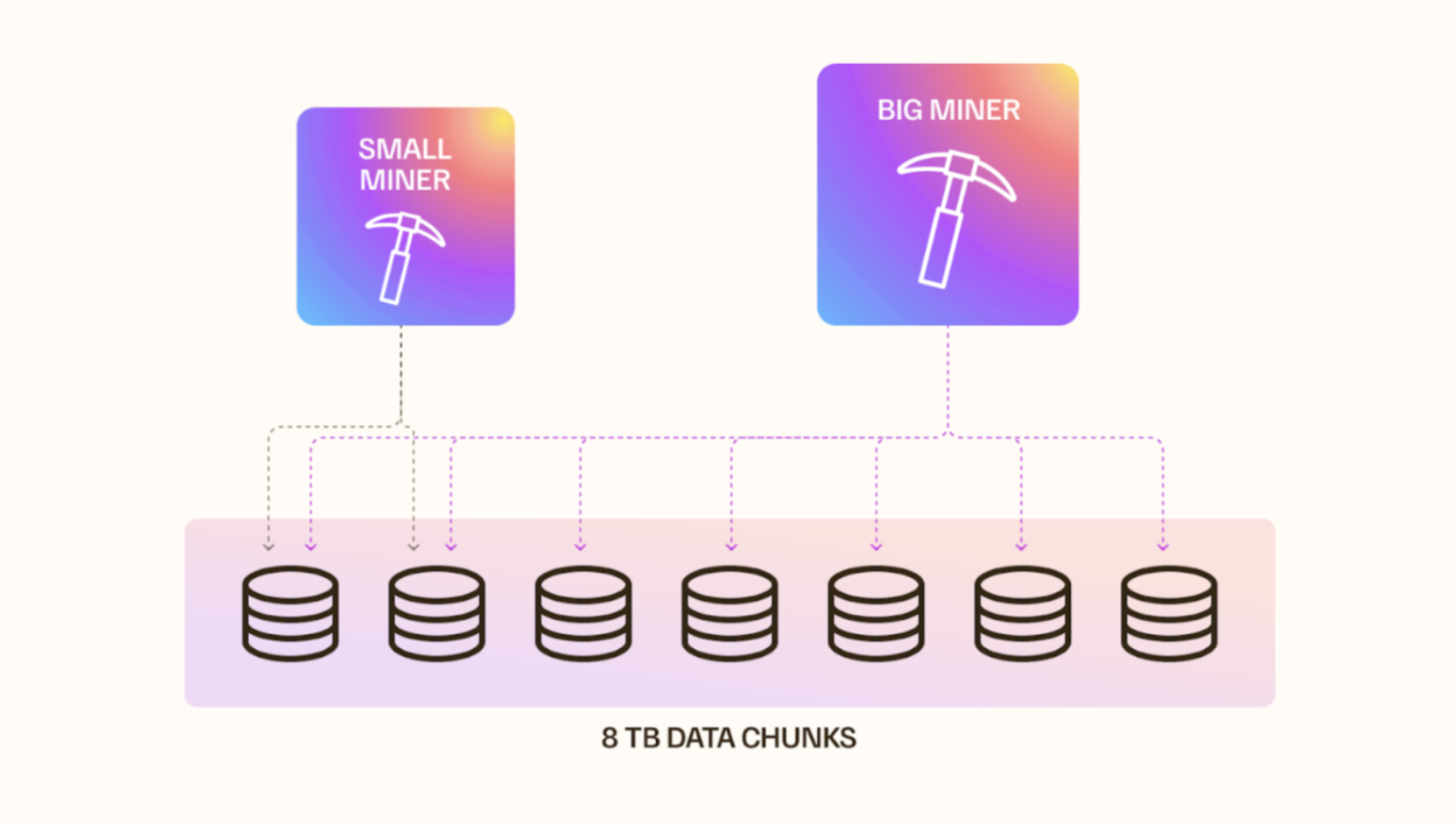

Fair Competition = Fair Reward

To promote fairness, the mining range is capped at 8 TB of data per mining operation.

Why an 8 TB limit?

- Small miners can compete with large operations

- Prevents centralization

- Lower barrier to entry

For large operators: Run multiple 8 TB instances.

For individuals: Focus on a single 8 TB range, which is still profitable.
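For a large operator, the cap turns capacity planning into simple arithmetic: the number of instances is the total capacity divided by 8 TB, rounded up. A small sketch (function names are hypothetical, and it ignores per-instance overhead and how 0G actually assigns mining ranges):

```python
import math

MINING_RANGE_CAP_TB = 8  # cap per mining operation, as stated above


def instances_needed(capacity_tb: float, cap_tb: float = MINING_RANGE_CAP_TB) -> int:
    """Number of capped mining instances needed to cover capacity_tb."""
    if capacity_tb <= 0:
        return 0
    return math.ceil(capacity_tb / cap_tb)


def split_capacity(capacity_tb: float, cap_tb: float = MINING_RANGE_CAP_TB) -> list[float]:
    """Spread capacity across instances: full capped ranges plus one remainder."""
    n = instances_needed(capacity_tb, cap_tb)
    return [min(cap_tb, capacity_tb - i * cap_tb) for i in range(n)]
```

For example, 100 TB needs 13 instances (100 / 8 = 12.5, rounded up), and 20 TB splits into ranges of 8, 8, and 4 TB.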

How 0G Compares

| Solution | Best For | Limitation |
|---|---|---|
| 0G Storage | AI/Web3 apps needing speed + scale | Newer ecosystem |
| AWS S3 | Traditional apps | Centralized, expensive |
| Filecoin | Cold storage archival | Slow retrieval, unstructured only |
| Arweave | Permanent storage | Extremely expensive |
| IPFS | Small files, hobby projects | Very slow, no guarantees |

0G's Unique Position

- Only solution supporting both structured and unstructured data

- Instant access unlike other decentralized options

- Built for AI from the ground up

Frequently Asked Questions

Is my data really safe if nodes go offline?

Yes. The erasure coding system lets your data survive node failures, because it can be rebuilt from any sufficient subset of its chunks. The network automatically maintains redundancy levels, so your data remains accessible even during significant outages.

How fast can I retrieve large files?

- Parallel retrieval from multiple nodes

- Bandwidth limited only by your connection

- 200 MBPS retrieval speed, even during network congestion

- CDN-like performance through geographic distribution
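Parallel retrieval means a client requests different chunks from different nodes at the same time and reassembles them in order. The sketch below simulates this with in-memory dictionaries standing in for storage nodes and a thread pool standing in for concurrent network requests; the node layout and function names are hypothetical, not the 0G SDK API.

```python
from concurrent.futures import ThreadPoolExecutor

Node = dict[int, bytes]  # hypothetical node: chunk index -> chunk bytes


def make_nodes(data: bytes, chunk_size: int, num_nodes: int) -> list[Node]:
    """Spread chunks across nodes round-robin (a stand-in for real placement)."""
    nodes: list[Node] = [{} for _ in range(num_nodes)]
    for i, start in enumerate(range(0, len(data), chunk_size)):
        nodes[i % num_nodes][i] = data[start:start + chunk_size]
    return nodes


def fetch_chunk(nodes: list[Node], index: int) -> bytes:
    """Stand-in for one network request: return the chunk from whichever node has it."""
    for node in nodes:
        if index in node:
            return node[index]
    raise KeyError(f"chunk {index} not found on any node")


def parallel_download(nodes: list[Node], num_chunks: int, workers: int = 8) -> bytes:
    """Request all chunks concurrently; pool.map keeps results in chunk order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda i: fetch_chunk(nodes, i), range(num_chunks)))
    return b"".join(parts)
```

Since each chunk comes from a different source, total throughput scales with the number of nodes serving in parallel until the client's own connection becomes the bottleneck.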

What happens to pricing as the network grows?

The network fee is fixed. All pricing is transparent and on-chain, preventing hidden fees or sudden changes.

Can I migrate from existing storage?

Yes, easily:

- Keep existing infrastructure

- Use 0G as overflow or backup

- Gradually migrate based on access patterns
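The "keep existing infrastructure and use 0G as a backup" path can be sketched as a thin wrapper that mirrors writes and falls back on reads. `MemoryStore` is a hypothetical in-memory stand-in for both backends; a real integration would replace the backup with calls from the 0G SDK, whose API is not shown here.

```python
from typing import Optional, Protocol


class Store(Protocol):
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> Optional[bytes]: ...


class MemoryStore:
    """Hypothetical stand-in for a real backend (existing storage or a 0G client)."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._data[key] = data

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)


class BackupStore:
    """Write to the existing primary store and mirror every write to a backup tier."""

    def __init__(self, primary: Store, backup: Store) -> None:
        self.primary, self.backup = primary, backup

    def put(self, key: str, data: bytes) -> None:
        self.primary.put(key, data)
        self.backup.put(key, data)

    def get(self, key: str) -> Optional[bytes]:
        data = self.primary.get(key)
        # Fall back to the backup tier if the primary no longer has the object.
        return data if data is not None else self.backup.get(key)
```

Application code keeps calling `put` and `get` as before; shifting more reads to the backup tier over time is one way to migrate gradually based on access patterns.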

Get Started

🧑‍💻 For Developers

Integrate 0G Storage in minutes → SDK Documentation

⛏️ For Storage Providers

Earn by providing storage capacity → Run a Storage Node

0G Storage: Purpose-built for AI and Web3's massive data needs.